Quality at Pendo: Our Experiences in Ensemble Testing

In this blog post you’ll read about our successes and challenges as we tried our hand at ensemble (mob) testing.

At Pendo, we’re always trying to push the boundaries of testing. We believe that incorporating new approaches is key to promoting our quality culture and a quality-focused mindset within our teams. We actively promote the idea that everyone is responsible for quality, and we constantly strive for more collaboration and knowledge sharing to help everyone on their quality journey. We have always found value in exploratory testing, and we recently tried two different techniques to improve how we carry out this key testing activity.

Ensemble Testing

Ensemble testing is commonly known as mob testing. However, because of the negative connotations of the word ‘mob’, we refer to the practice as ensemble testing. The term ‘ensemble’ is also gaining traction in other published articles, including those referenced below.

Ensemble testing is an evolution of ensemble programming, which Woody Zuill defines as “All the brilliant people working on the same thing, at the same time, in the same space, on the same computer.”

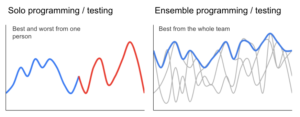

One of the benefits of ensemble work is that you get the best out of the whole team for the entire exercise.

The graphs above show that when one coder (or tester) works with one reviewer, both their best and their worst moments end up in the code. When a whole team works on the same problem, however, we get the best of everyone. This example is based on the graphic in Mob Testing: Experience Report.

The Why

We were migrating a major part of our app to a new framework and wanted to make sure we had identified as many areas of risk and impact as possible.

As the quality lead on the project, I wanted to try a different approach to testing that would enable us to do this, while aiming to achieve the following goals:

- To try a different method of testing (that would lend itself well to remote working)

- To open new channels and methods for communication and collaboration within the quality team

- To promote knowledge sharing

- To increase test coverage and confidence in areas affected by framework migration (including data)

Ensemble testing brings together a group of people (in our case, a group of Quality Engineers) to work on one feature. Recruiting quality folks from their feature teams ensured that the known critical paths within the product were covered, and that expertise that would usually be siloed within one team could be shared so that everyone could learn.

With this extra knowledge, we were able to improve the test coverage of our framework migration to include niche areas that my feature team was not yet familiar with.

The Process

I used the Ensemble Programming Guidebook as a reference; I’ve linked it in the resources section at the end of this blog. Each person in the group is assigned one of the roles listed below: there is one driver, and the remaining participants are navigators, one of whom acts as Lead Navigator. The driver is not allowed to make any decisions. All instructions must come from the navigators, and the roles are rotated on a timer. For people new to the practice, the timer is set to around 4 minutes, and the interval is expected to increase with experience.

The Roles:

Navigator(s)

- Come up with ideas and discuss with the group

- Think about what you could test next to build upon existing ideas

Lead Navigator

- Condense other people’s ideas into one scenario

- Give the driver instructions on how to complete the test scenario

- Make notes about the scenario and the output; these could become regression or automated tests

Driver

- The actor

- Make no decisions at the keyboard

- Listen to the Lead Navigator and follow their instructions
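To make the timed rotation concrete, here is a minimal Python sketch of a turn schedule. It assumes a simple round-robin: on each turn the next person in the list drives, the person after them acts as Lead Navigator, and everyone else navigates. The 4-minute default mirrors the starting interval described above. The names `rotation_schedule` and `Turn`, and the participant names, are hypothetical illustrations rather than anything prescribed by the Ensemble Programming Guidebook.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Turn:
    """One timed turn in a hypothetical ensemble rotation."""
    number: int
    start_minute: int
    driver: str
    lead_navigator: str
    navigators: tuple[str, ...]


def rotation_schedule(participants: list[str], turns: int,
                      minutes_per_turn: int = 4) -> list[Turn]:
    """Round-robin roles: person i drives, person i+1 leads, the rest navigate.

    Assumption: the driver and Lead Navigator simply advance one place per
    turn; a real group may choose a different rotation order.
    """
    if len(participants) < 2:
        raise ValueError("need at least a driver and a lead navigator")
    if minutes_per_turn <= 0:
        raise ValueError("minutes_per_turn must be positive")

    n = len(participants)
    schedule = []
    for i in range(turns):
        driver = participants[i % n]
        lead = participants[(i + 1) % n]
        # Everyone who is neither driving nor leading contributes ideas.
        others = tuple(p for p in participants if p not in (driver, lead))
        schedule.append(Turn(i + 1, i * minutes_per_turn, driver, lead, others))
    return schedule


if __name__ == "__main__":
    team = ["Ana", "Ben", "Cai", "Dee"]  # hypothetical participant names
    for turn in rotation_schedule(team, turns=4):
        print(f"Turn {turn.number} (minute {turn.start_minute}): "
              f"driver={turn.driver}, lead={turn.lead_navigator}, "
              f"navigators={', '.join(turn.navigators)}")
```

For a one-hour session, `rotation_schedule(team, turns=60 // 4)` yields 15 turns. Lengthening `minutes_per_turn` reduces the number of rotations that fit into the same timebox.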

The Exercise

I decided to run the exercise in two rounds, mainly so my feature team had a chance to tidy things up before the quality team was brought in.

Round 1 – Feature team

We followed the process loosely rather than sticking to it strictly. We rotated drivers and lead navigators, but found it more effective to have a note-taker separate from the Lead Navigator. We separated these roles because ideas were coming from several people at once, and the Lead Navigator found it difficult to condense them and relay instructions to the driver while also taking notes on the scenario.

We found one major issue, and identified some further areas of risk that required a little more testing.

Round 2 – Quality team

The process evolved into more of a brainstorming session. The Lead Navigator in this round faced the same difficulties as in round 1, and since we were timeboxed to an hour, I wanted to make sure we discussed and tested as many areas as possible. I became the note-taker, and the group used the time to brainstorm each knowledge area and answer one question: could the code change affect anything here?

We still rotated the driver, and the group still contributed ideas, but we were more focused on uncovering possible risks than on sticking to the process.

The Results

- Two high-severity issues found in round 1

- A further three lower-severity issues found in round 2

- Identified risk areas that we didn’t know about

- Greater collaboration and teamwork while performing the exercise

- Increased product knowledge

We ran a short retrospective after the session, and the feedback was mostly positive. Participants all thought it could be a useful exercise with practice and enjoyed talking through different things to test. We could bounce ideas off each other and gain insights into how other people approach testing. It reinforced the idea that working together increases the quality of a feature.

I felt the exercise improved how we work as a group and could build strong cross-team relationships if used often enough. I reached the goals I set for the session: we found bugs and identified the niche areas that needed more test consideration.

Improvements

Participants agreed that it took a while to get into, and that the roles needed more clarification. Some felt 4 minutes wasn’t long enough to spend in each role (though this might vary depending on the complexity of the feature under test). I found the roles hard to enforce, partly because there was a lot of information to relay. The documentation shared in advance may also not have been the clearest example. Key suggestions for improving our process next time included:

- Make the timer longer

- Set up a few sessions with different time slots to encourage more participation

- Define a narrower focus for each session

- Run an example round first, and find YouTube videos that demonstrate the practice

- Record the session so we can run a longer retrospective

Other applications for this exercise

I think the ensemble technique can be applied to a number of other processes, including (but not limited to):

- Brainstorming and building mind maps of the product

- Focussed testing sessions on smaller changes

- Knowledge sharing

- Programming with your team on one ticket at a time

- Refactoring

- Cleaning up automated tests

- Investigating failing automated tests

Summary

I thought this was a great way to increase knowledge, communication and relations between offices. The exercise increased our confidence in the changes and directly contributed to the upgrade going smoothly, without any customer-reported bugs.

Resources

I spent a lot of time reading the following articles, all written by Maaret Pyhäjärvi: